Prisma 7 shipped on November 19th. Since then, we have heard a lot from the community. Many of you love the new setup and the move away from Rust, while others have raised concerns about performance. This post is meant to be a transparent overview of what is happening and how we’re going to address it.

Is Prisma 7 slow?

The most common question we have seen is simple:

“Why does Prisma 7 feel slower than Prisma 6?”

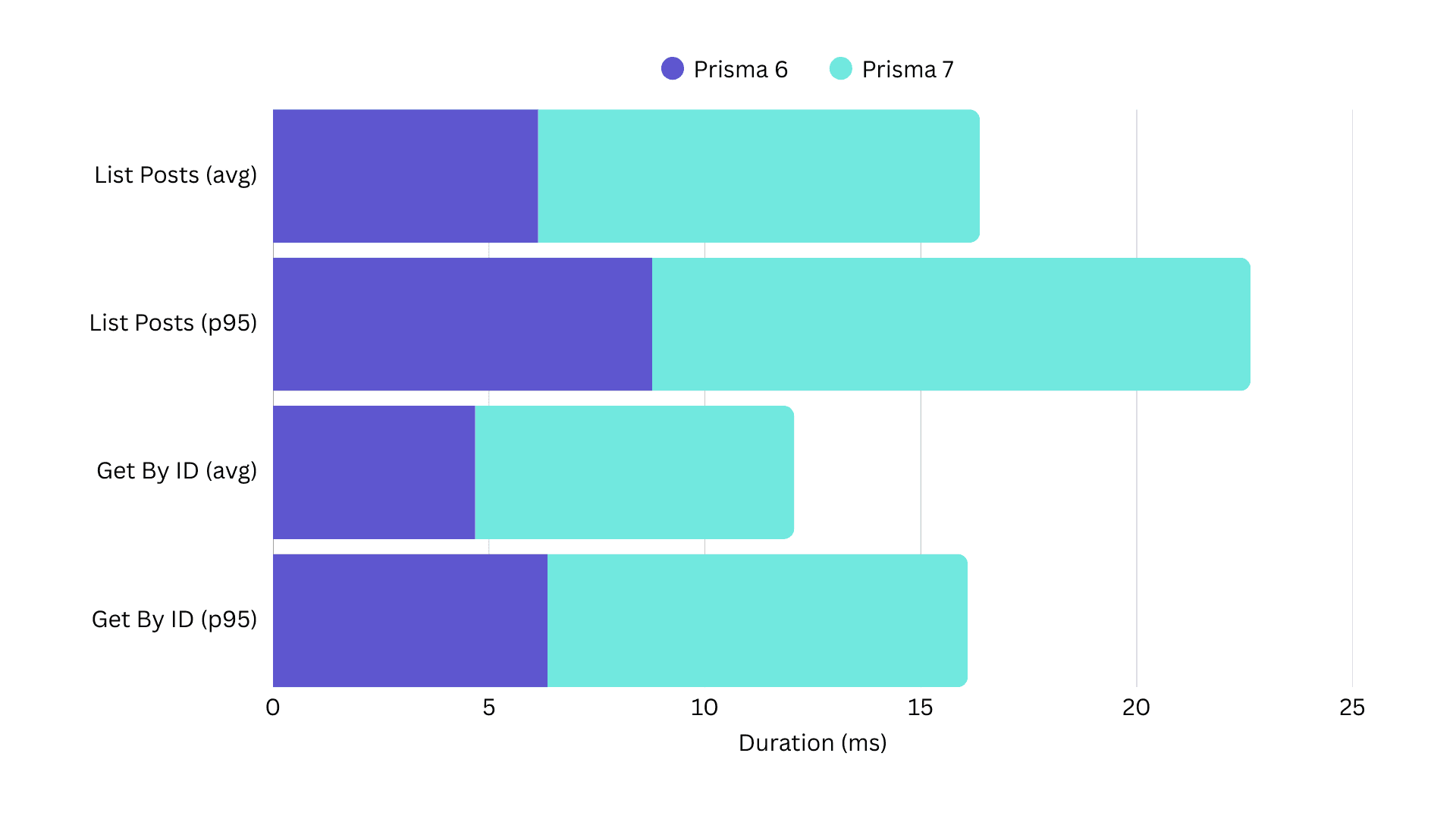

Some benchmarks show Prisma 7 running slower than other ORMs, and in some cases even slower than Prisma 6. If those graphs are all you have seen, the confusion is understandable. We have reviewed every issue, comment, and benchmark the community shared. The most referenced one is here: a detailed test suite that compares Prisma 6 and Prisma 7. In those tests, Prisma 7 falls behind.

But before drawing conclusions, we need to look at what these tests are actually measuring.

What is in a benchmark?

Benchmarks matter, but only when the workloads they test match real usage. The benchmark above runs hundreds of tiny queries in a tight loop, with no pauses and none of the delays a real application would have.

In this specific setup, Prisma 7 has a regression. We want to be clear about that.

Why does it happen?

For each of these tiny queries, Prisma performs a small compilation step before it can run the SQL. Repeat that work continuously and the overhead stacks up; it is not a question of whether performance will degrade, only when.

Microbenchmarks like this amplify the effect because they do not represent real-world usage, where queries are rarely run back to back over a short period of time. Most real-world applications have network requests, user input, background tasks, and other natural pauses. These spread out the pre-query work that a microbenchmark compresses into a single block of time.
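To make the amplification concrete, here is a back-of-the-envelope model in TypeScript. It is a sketch, not a description of Prisma's internals: it assumes a fixed pre-query cost per query, and the `Workload`/`summarize` names and every number in it are hypothetical, not measurements. Both runs do exactly the same pre-query work; only the pauses between queries change.

```typescript
// Illustrative cost model only: all numbers below are made up, not measurements.
// Times are in microseconds so the arithmetic stays in whole numbers.
interface Workload {
  queries: number;   // how many tiny queries are issued
  prepareUs: number; // fixed pre-query work per query (e.g. query compilation)
  executeUs: number; // time spent running the SQL itself per query
  gapUs: number;     // natural pause before the next query (network, user input, ...)
}

interface Summary {
  totalUs: number;        // total wall-clock time for the whole run
  prepareTotalUs: number; // time spent in the pre-query step across all queries
  prepareShare: number;   // fraction of wall-clock time spent in the pre-query step
}

function summarize(w: Workload): Summary {
  const prepareTotalUs = w.queries * w.prepareUs;
  const totalUs = w.queries * (w.prepareUs + w.executeUs + w.gapUs);
  return { totalUs, prepareTotalUs, prepareShare: prepareTotalUs / totalUs };
}

// Same queries, same per-query overhead; only the pauses differ.
const tightLoop = summarize({ queries: 500, prepareUs: 100, executeUs: 200, gapUs: 0 });
const withPauses = summarize({ queries: 500, prepareUs: 100, executeUs: 200, gapUs: 20000 });

console.log(`tight loop:  ${(tightLoop.prepareShare * 100).toFixed(1)}% of time in pre-query work`);
console.log(`with pauses: ${(withPauses.prepareShare * 100).toFixed(1)}% of time in pre-query work`);
```

With these invented numbers, the pre-query step is about a third of the measured time in the tight loop and well under 1% once pauses are added, even though the absolute work is identical. The overhead does not disappear in the second case; the tight loop simply puts it in the spotlight.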

Still, a regression is a regression. We are fixing it.

What Prisma 7 was designed to improve

In the Prisma 7 launch announcement, we reported queries running up to 3x faster. So how does that square with reports of slower performance? It depends on what you measure.

When we looked at how people use Prisma in production, one common problem was that queries returning large result sets were slow. This slowdown was often caused by the handoff between Rust and JavaScript. By moving away from Rust, Prisma 7 removes that bottleneck, and queries with large result sets improve significantly. In many of our tests, these queries completed up to three times faster than before.

So the full picture is this:

- Prisma 7 gives major improvements for large result sets

- Prisma 7 has a known regression for many repeated tiny queries in microbenchmark loops

Both statements are true. Both matter. And both guide what we are working on.
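The two bullets above can both hold because they describe different cost terms. The sketch below is a deliberately simplified model, not a description of how either Prisma version works internally: it assumes each query costs a fixed amount plus a per-row amount. The `CostModel`, `queryCostUs`, and `crossoverRows` names, and every parameter value, are hypothetical and chosen only to show the shape of the trade-off.

```typescript
// Hypothetical two-term cost model; the parameters are invented for illustration.
interface CostModel {
  name: string;
  fixedUs: number;  // per-query cost paid regardless of result size
  perRowUs: number; // cost paid for every row returned
}

// Estimated latency (in microseconds) of one query returning `rows` rows.
function queryCostUs(model: CostModel, rows: number): number {
  return model.fixedUs + rows * model.perRowUs;
}

// Row count at which both models cost the same.
// Assumes `a` has the lower fixed cost and the higher per-row cost.
function crossoverRows(a: CostModel, b: CostModel): number {
  return (b.fixedUs - a.fixedUs) / (a.perRowUs - b.perRowUs);
}

// Cheaper fixed cost, but a per-row handoff penalty...
const handoffHeavy: CostModel = { name: "per-row handoff", fixedUs: 20, perRowUs: 2 };
// ...versus a larger fixed (e.g. compilation) cost with cheaper rows.
const compileFirst: CostModel = { name: "per-query compile", fixedUs: 60, perRowUs: 1 };

for (const rows of [1, 10000]) {
  const a = queryCostUs(handoffHeavy, rows);
  const b = queryCostUs(compileFirst, rows);
  console.log(`${rows} rows: ${handoffHeavy.name} ${a} us, ${compileFirst.name} ${b} us`);
}
console.log(`costs cross at ${crossoverRows(handoffHeavy, compileFirst)} rows`);
```

With these invented parameters, the per-row-handoff model wins for a single-row query (22 µs vs 61 µs), the compile-first model wins at 10,000 rows (10,060 µs vs 20,020 µs), and the two cross at 40 rows. Real crossover points depend on the workload, which is why a benchmark that exercises only one shape of query tells only part of the story.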

What comes next

OK, so what are we doing about it? Here is our plan for addressing the performance issues and for expanding how we measure Prisma in the future.

Now

- Identifying the parts of Prisma 7 that introduce latency and bottlenecks

- Optimizing the parts of Prisma 7 that these benchmarks stress

Next

- Testing regressions against community-created test suites, not only internal ones

- Sharing updated numbers as improvements land

Future

- Developing more varied benchmarks with community feedback

- Introducing more automated tests to measure performance continuously

Performance is important to us, and transparency is as well. We want to make sure that we understand the problem and have a clear path forward that addresses people’s comments and concerns. If you have shared benchmarks or feedback, thank you! Your examples have been incredibly helpful, and we will continue updating you as fixes roll out.

Be sure to follow us on social media to stay up to date with the latest releases of Prisma ORM and Prisma Postgres.
