Learn how MCP works by following the practical example of how we built the Prisma MCP server, including the tradeoffs between local and remote MCP servers, the @modelcontextprotocol/sdk package, and how we enabled LLMs to use the Prisma CLI.

Understanding MCP

Before diving into the technical details of how we built the Prisma MCP server, let's take a quick step back and understand MCP servers from the beginning.

What if an LLM needs access to proprietary data or systems?

LLMs are trained on information from the world wide web and can provide accurate answers to even the most esoteric questions that have been discussed somewhere on the internet.

But what if you want your LLM to answer questions based on proprietary data or systems? Or to perform some kind of action on your behalf? Imagine prompting ChatGPT with the following:

- "Find all the invoices from last year on my file system."

- "Create a new database instance in the us-west region for me."

- "Open a new GitHub issue in a specific repo."

A purely internet-trained LLM won't be able to help with these because it doesn't have access to your file system, your database provider or the GitHub API.

"Tool access" enables LLMs to interact with the outside world

In such scenarios, an LLM needs additional capabilities to interact with the "outside world"—to go beyond its knowledge of the web and perform actions on other systems.

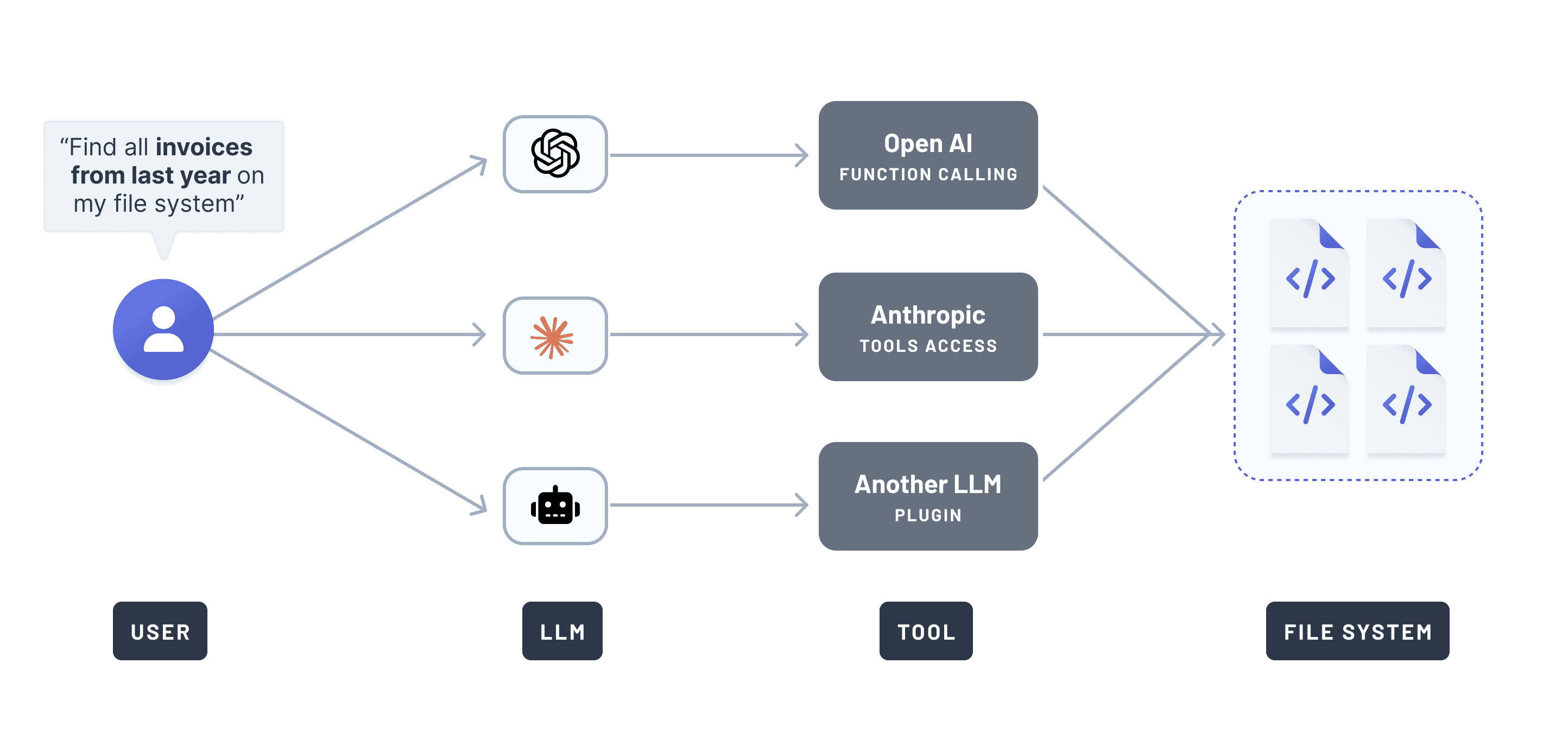

LLM providers have responded to this need by implementing so-called tool access. Individual providers use different names for it: OpenAI calls it function calling, Anthropic refers to it as tool use, and others use terms like "plugins" or "actions."

This approach was messy because each LLM had a different interface for interacting with tools.

For example, if you wanted multiple LLMs to access your file system, you would need to implement the same "file system access tool" multiple times, each tailored to a specific LLM. Here's what that might have looked like:
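As a rough illustration, here is one hypothetical "search files" tool (the name `search_files` and its `query` argument are made up) declared in two provider formats: OpenAI's Chat Completions function-calling entry and Anthropic's Messages API tool-use entry. The adapters show that each provider also reports tool calls in a different shape, which is part of what had to be reimplemented per LLM.

```typescript
// Hypothetical tool: the same JSON Schema describes the arguments for both providers.
const parameters = {
  type: "object",
  properties: {
    query: { type: "string", description: "Text to look for in file names" },
  },
  required: ["query"],
};

// OpenAI function calling: an entry in the Chat Completions `tools` array.
export const openAiTool = {
  type: "function",
  function: { name: "search_files", description: "Search the local file system", parameters },
};

// Anthropic tool use: an entry in the Messages API `tools` array.
export const anthropicTool = {
  name: "search_files",
  description: "Search the local file system",
  input_schema: parameters,
};

// Each provider also reports tool calls in its own shape, so each needs an adapter.
// OpenAI: message.tool_calls[i].function.arguments is a JSON-encoded string.
export function fromOpenAi(call: { function: { name: string; arguments: string } }) {
  return { name: call.function.name, args: JSON.parse(call.function.arguments) as unknown };
}

// Anthropic: a content block of type "tool_use" carries `input` as an already-parsed object.
export function fromAnthropic(block: { type: "tool_use"; name: string; input: unknown }) {
  return { name: block.name, args: block.input };
}
```

Multiply these declarations and adapters by every provider and every tool, and the maintenance burden becomes clear.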

With new LLMs popping up left and right, you can imagine how chaotic this would become if every LLM had its own proprietary interface for accessing the outside world.

Introducing MCP: Standardizing tool access for LLMs

In November 2024, Anthropic introduced the Model Context Protocol (MCP) as a:

new standard for connecting AI assistants to the systems where data lives, including content repositories, business tools, and development environments.

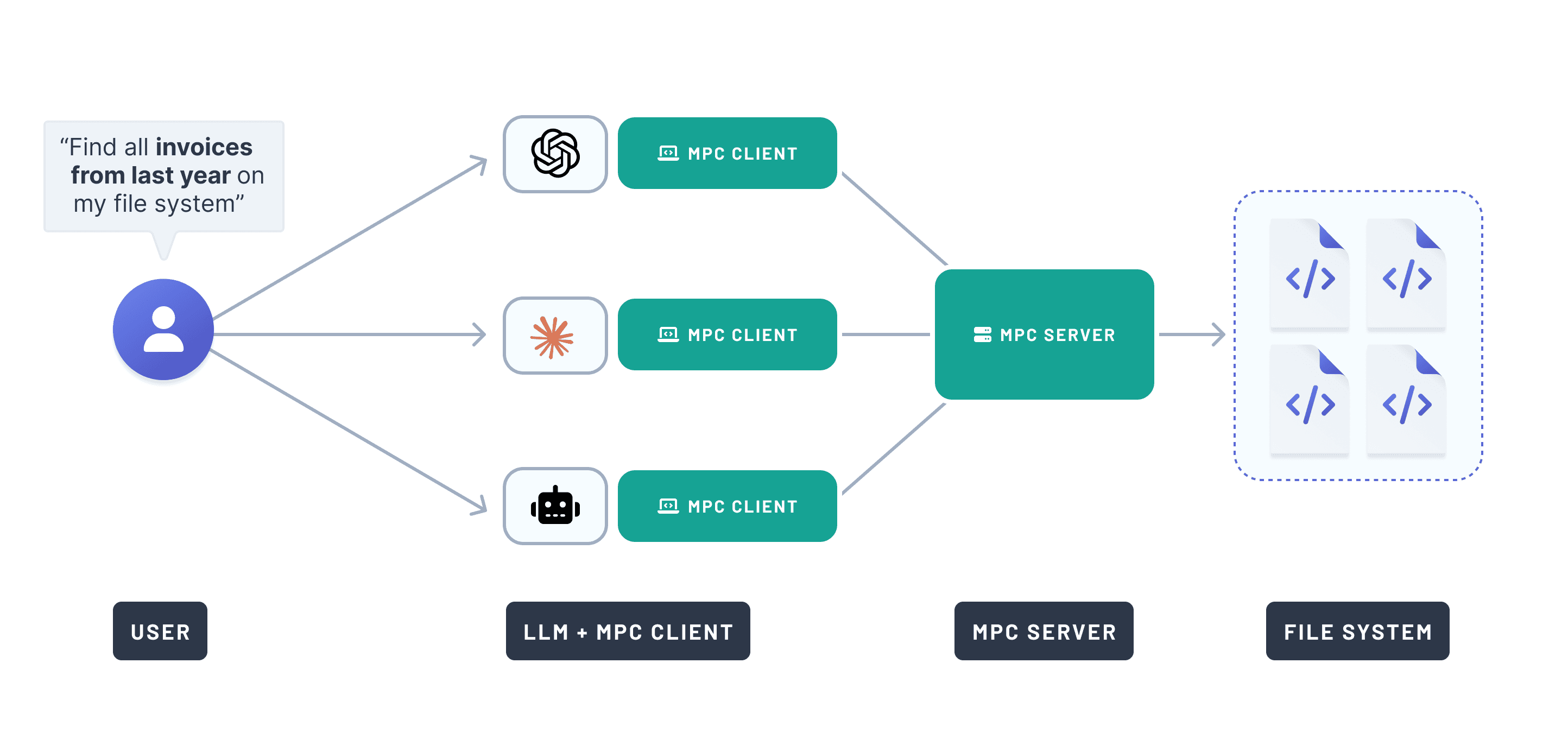

MCP provides a universal, open standard for connecting AI systems with external data sources. All LLMs that support MCP can now access the same functionality if it's exposed through an MCP server.

Returning to the previous example: With MCP, you only need to implement the invoice search functionality once. You can then expose it via an MCP server to all LLMs that support MCP. Here's a pseudocode implementation:
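A dependency-free sketch of the idea, written without the MCP SDK: the invoice search is implemented once and exposed only through the two tool-related MCP methods, `tools/list` and `tools/call`. The file list, the tool name `search_invoices`, and the `handle` function are hypothetical stand-ins; a real server would read the file system and use an SDK for the protocol plumbing.

```typescript
// Pseudocode-level sketch (no SDK): one tool implementation behind the two
// MCP methods a client uses to discover and invoke tools.
interface Tool {
  name: string;
  description: string;
  inputSchema: Record<string, unknown>; // JSON Schema for the arguments
  handler: (args: Record<string, unknown>) => Promise<string>;
}

// Stand-in for the user's file system so the sketch stays self-contained.
const files = ["invoices/2023/acme-0042.pdf", "invoices/2024/acme-0107.pdf", "notes/todo.txt"];

// The invoice search, implemented exactly once.
const searchInvoices: Tool = {
  name: "search_invoices",
  description: "Find invoice files from a given year",
  inputSchema: {
    type: "object",
    properties: { year: { type: "number" } },
    required: ["year"],
  },
  handler: async (args) =>
    files.filter((path) => path.startsWith(`invoices/${String(args.year)}/`)).join("\n"),
};

const tools = new Map<string, Tool>([[searchInvoices.name, searchInvoices]]);

// Any MCP-capable client sends these same requests, whichever LLM sits behind it.
export async function handle(
  method: string,
  params: { name?: string; arguments?: Record<string, unknown> } = {},
): Promise<unknown> {
  switch (method) {
    case "tools/list":
      return {
        tools: [...tools.values()].map(({ name, description, inputSchema }) => ({
          name,
          description,
          inputSchema,
        })),
      };
    case "tools/call": {
      const tool = tools.get(params.name ?? "");
      if (!tool) throw new Error(`Unknown tool: ${params.name}`);
      const text = await tool.handler(params.arguments ?? {});
      return { content: [{ type: "text", text }] };
    }
    default:
      throw new Error(`Unsupported method: ${method}`);
  }
}
```

For example, `handle("tools/call", { name: "search_invoices", arguments: { year: 2024 } })` resolves to a single text item listing `invoices/2024/acme-0107.pdf`.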

Anthropic clearly struck a chord with this standard. If you were on X at the time, you probably saw multiple MCP posts a day. Google Trends for "MCP" tell the same story:

How to connect an LLM to an MCP server

All you need to augment an LLM with MCP server functionality is a CLI command to launch the server. Most AI tools accept a JSON configuration like this:
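The configuration usually lives under an `mcpServers` key, with one entry per server naming the `command` to run and its `args`. The sketch below types that shape in TypeScript and serializes a hypothetical entry; the server name `invoice-search` and the path `./build/index.js` are made up, and the exact keys a given AI tool accepts (for example whether `env` is supported) should be checked in its documentation.

```typescript
// Shape of the JSON that many AI tools accept for MCP servers (check your tool's docs).
interface McpServerEntry {
  command: string; // executable the AI tool launches
  args?: string[]; // arguments passed to that executable
  env?: Record<string, string>; // optional extra environment variables
}

interface McpConfig {
  mcpServers: Record<string, McpServerEntry>;
}

// Hypothetical example: a local invoice-search server compiled to ./build/index.js.
export const config: McpConfig = {
  mcpServers: {
    "invoice-search": { command: "node", args: ["./build/index.js"] },
  },
};

// Serialize to the JSON you would paste into the tool's MCP settings.
export const json = JSON.stringify(config, null, 2);
```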

The AI tool runs the command, passes the args, and the LLM gains access to the server's tools.
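Under the hood, a host roughly does the following with a config entry for a local server: it spawns the command as a child process and exchanges JSON-RPC 2.0 messages over stdin/stdout, one JSON object per line, as MCP's stdio transport specifies. This is a simplified sketch, not any particular tool's implementation; the client name and the protocol version string are assumptions (the version is negotiated during `initialize`).

```typescript
import { spawn, type ChildProcess } from "node:child_process";

// One entry of the "mcpServers" object from the AI tool's config.
interface ServerEntry {
  command: string;
  args?: string[];
  env?: Record<string, string>;
}

// MCP's stdio transport exchanges JSON-RPC 2.0 messages, one JSON object per line.
export function frame(message: object): string {
  return JSON.stringify(message) + "\n";
}

// Launch the server as a child process; requests go to its stdin and
// responses come back on its stdout.
export function launch(entry: ServerEntry): ChildProcess {
  return spawn(entry.command, entry.args ?? [], {
    env: { ...process.env, ...entry.env },
    stdio: ["pipe", "pipe", "inherit"], // stderr is left for server logs
  });
}

// Handshake, then tool discovery. A client waits for the initialize response
// before sending the remaining messages. The version string is an assumption.
export const handshake = [
  {
    jsonrpc: "2.0",
    id: 1,
    method: "initialize",
    params: {
      protocolVersion: "2025-03-26",
      capabilities: {},
      clientInfo: { name: "example-host", version: "1.0.0" },
    },
  },
  { jsonrpc: "2.0", method: "notifications/initialized" },
  { jsonrpc: "2.0", id: 2, method: "tools/list" },
];
```

The `tools/list` response is what the host hands to the LLM as the set of tools it may call.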

Building the Prisma MCP server

At Prisma, we've built the most popular TypeScript ORM and the world's most efficient Postgres database running on unikernels.

Naturally, we wondered how we could use MCP to simplify database workflows for developers.

Why build an MCP server for Prisma?

Many developers use AI coding tools like Cursor or Windsurf when building data-driven apps with Prisma.

These AI coding tools have so-called agent modes in which the AI edits source files for you, and you simply review and accept its suggestions. The AI can also offer to run a CLI command for you; as with file edits, you need to confirm that the command should actually be executed.

Since many interactions with Prisma Postgres and Prisma ORM are driven by the Prisma CLI, we wanted to make it possible for an LLM to run Prisma CLI commands on your behalf, e.g. for these workflows:

- Checking the status of database migrations

- Creating and running database migrations

- Authenticating with the Prisma Console

- Provisioning new Prisma Postgres instances

Before MCP, we would have had to implement support separately for each LLM. With MCP, we can implement a single server that supports all of them at once.

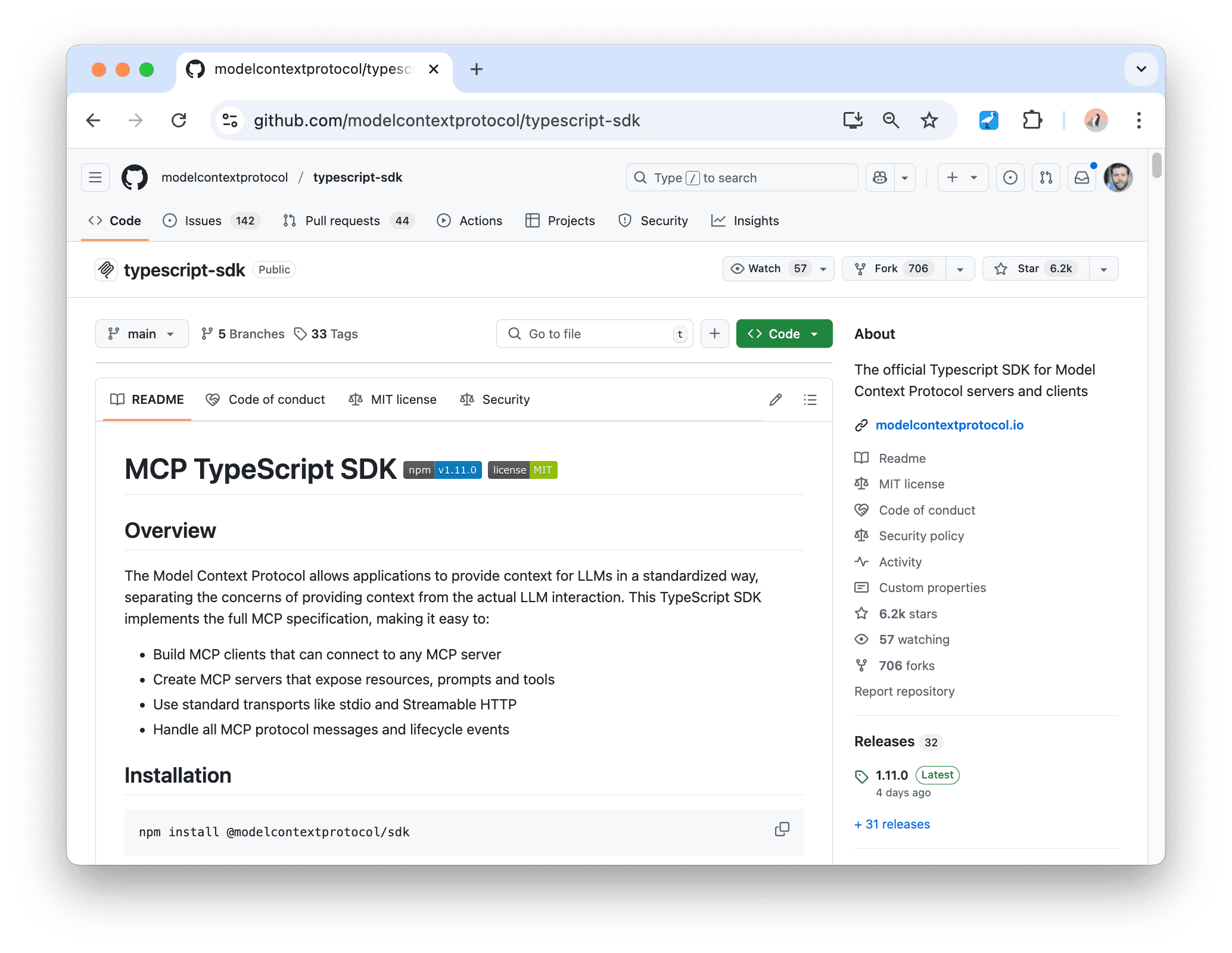

The @modelcontextprotocol/sdk package: "Like Express for MCP"

When introducing MCP, Anthropic released SDKs for various languages. The TypeScript SDK lives in the typescript-sdk repository and provides everything needed to implement MCP clients and servers.
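To make the Express comparison concrete: `new McpServer(...)` plays the role of `express()`, and `server.tool(...)` plays the role of `app.get(...)`, registering a named handler. The sketch below defines a hypothetical `greet` tool and, instead of spawning a process, connects an SDK `Client` to the server through the SDK's in-memory transport pair, which is handy for trying things out or for tests. It assumes `@modelcontextprotocol/sdk` and `zod` are installed.

```typescript
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { Client } from "@modelcontextprotocol/sdk/client/index.js";
import { InMemoryTransport } from "@modelcontextprotocol/sdk/inMemory.js";
import { z } from "zod";

// Like `const app = express()`: create the server object.
export function createServer(): McpServer {
  const server = new McpServer({ name: "hello-mcp", version: "1.0.0" });

  // Like `app.get(path, handler)`: register a named tool with its handler.
  server.tool(
    "greet",
    "Greet someone by name",
    { name: z.string() },
    async ({ name }) => ({ content: [{ type: "text", text: `Hello, ${name}!` }] }),
  );
  return server;
}

// Connect a client and the server in-process (no subprocess) and call the tool.
export async function demo(name: string): Promise<string> {
  const [clientTransport, serverTransport] = InMemoryTransport.createLinkedPair();
  const server = createServer();
  await server.connect(serverTransport);

  const client = new Client({ name: "demo-client", version: "1.0.0" });
  await client.connect(clientTransport);

  const result = await client.callTool({ name: "greet", arguments: { name } });
  await client.close();
  await server.close();

  const [first] = result.content as Array<{ type: string; text?: string }>;
  return first?.text ?? "";
}
```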

Local vs. remote MCP servers

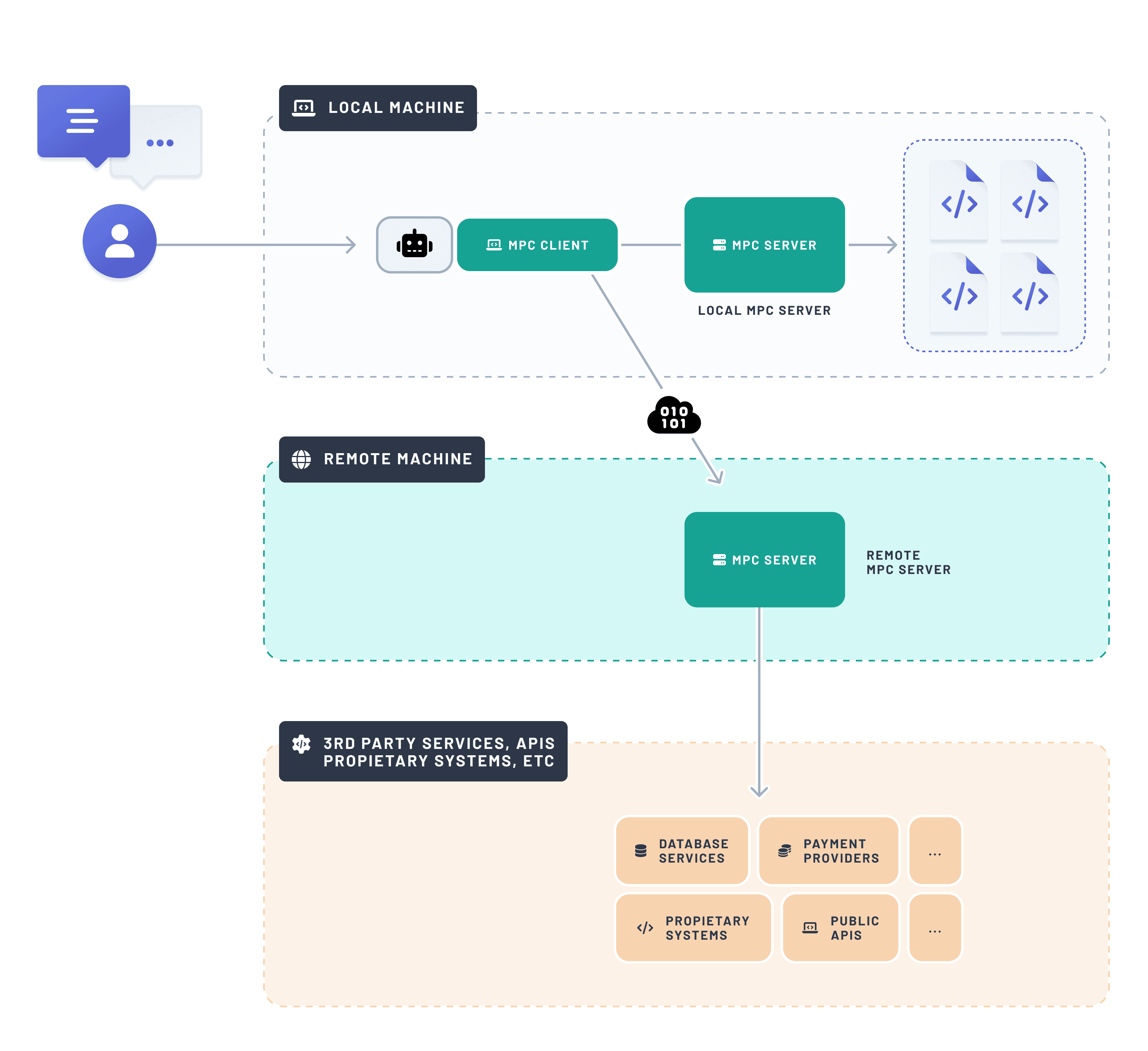

When building an MCP server, you must decide if it runs locally (on the same machine as the user) or remotely (on a machine accessible via the internet).

This depends on what the server does. If it needs access to the user's file system, it must run locally. If it just calls APIs, it can be local or remote (because an API can be called from either a local or a remote machine).

In the case of Prisma, the LLM primarily needs access to the Prisma CLI in order to support developers with database-related workflows. The Prisma CLI may connect to a local or a remote database instance. However, because the CLI commands are executed locally, the Prisma MCP server must also run locally.

Enabling LLMs to call Prisma CLI commands

The Prisma MCP server is quite simple and lightweight; you can explore it on GitHub. It has been packaged as part of the Prisma CLI and can be started with the prisma mcp --early-access command.

Here's its basic structure:
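A hedged sketch of what such a structure can look like, built only from what the text describes: a CLI command whose `parse` function creates an `McpServer` and connects it through `StdioServerTransport`. The class name `Mcp`, the `Command` interface, the server name and version, and the early-access check are assumptions for illustration, not Prisma's actual source.

```typescript
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { StdioServerTransport } from "@modelcontextprotocol/sdk/server/stdio.js";

// Hypothetical stand-in for a CLI command interface: the CLI calls parse()
// with the remaining argv when the user runs `prisma mcp ...`.
interface Command {
  parse(argv: string[]): Promise<string | Error>;
}

export class Mcp implements Command {
  async parse(argv: string[]): Promise<string | Error> {
    // Assumed early-access gate, mirroring the --early-access flag on the command.
    if (!argv.includes("--early-access")) {
      return new Error("prisma mcp is in early access; re-run with --early-access");
    }

    const server = new McpServer({ name: "Prisma", version: "1.0.0" });

    // server.tool(...) registrations for each CLI workflow would go here.

    // Local server: talk to the AI tool over stdin/stdout rather than HTTP.
    const transport = new StdioServerTransport();
    await server.connect(transport);
    return "";
  }
}
```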

The parse function is executed when the prisma mcp --early-access CLI command is invoked. It starts an MCP server that uses the StdioServerTransport (as opposed to the StreamableHTTPServerTransport) because it runs locally.

What’s not shown in the above snippet is the actual implementation of the CLI commands. Let’s zoom into the parse function and look at the prisma migrate dev and prisma init --db commands as examples:
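A minimal sketch of registering those two commands as tools with `server.tool()` and zod argument schemas. The tool names, descriptions, and the injected `run` function are hypothetical; injecting the runner keeps the registration logic independent of how processes are spawned. `--name` is the standard `prisma migrate dev` flag for naming a migration.

```typescript
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { z } from "zod";

// Shape of an MCP tool result carrying text output.
type ToolResult = { content: { type: "text"; text: string }[]; isError?: boolean };

// Hypothetical runner signature: execute a command and report its output.
type Runner = (file: string, args: string[]) => Promise<ToolResult>;

export function registerPrismaTools(server: McpServer, run: Runner): void {
  // Name + description tell the LLM what the tool does; the zod shape
  // becomes the JSON Schema for its arguments.
  server.tool(
    "migrate-dev",
    "Create and apply a migration after schema.prisma changes (runs `prisma migrate dev`).",
    { name: z.string().describe("A short, descriptive name for the migration") },
    async ({ name }) => run("npx", ["prisma", "migrate", "dev", "--name", name]),
  );

  // A tool without arguments: set up Prisma with a new Prisma Postgres database.
  server.tool(
    "init-prisma-postgres",
    "Set up Prisma ORM with a new Prisma Postgres database (runs `prisma init --db`).",
    async () => run("npx", ["prisma", "init", "--db"]),
  );
}
```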

Each tool is registered via server.tool() with:

- A name (so the LLM can reference it)

- A description (to help the LLM understand its purpose)

- An argument schema (we use zod)

- A function implementing the logic

Our implementation of all tools follows the same straightforward pattern: When a tool is invoked, we simply spawn a new process (via the runCommand function, which uses execa) to execute the corresponding CLI command. That's all you need to enable an LLM to invoke commands on behalf of users.
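A possible shape for such a `runCommand` helper, assuming execa v6 or later: it spawns the process, captures its output, and wraps the result in an MCP tool result. The signature and the error handling are assumptions for illustration, not the Prisma implementation.

```typescript
import { execa } from "execa";

// Minimal MCP tool result carrying text output.
export type ToolResult = { content: { type: "text"; text: string }[]; isError?: boolean };

// Hypothetical runCommand: spawn a CLI process and turn its output into a tool result.
export async function runCommand(file: string, args: string[]): Promise<ToolResult> {
  // reject: false resolves (instead of throwing) on a non-zero exit code, so the
  // LLM still sees what went wrong; all: true interleaves stdout and stderr.
  const result = await execa(file, args, { reject: false, all: true });
  const text = result.all || (result.failed ? `Command failed: ${result.command}` : "");
  return { content: [{ type: "text", text }], isError: result.failed };
}
```

Passing arguments as an array, rather than building a shell string, keeps LLM-supplied values such as a migration name from being interpreted by a shell.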

Try the Prisma MCP server now

If you're curious to try it, paste this snippet into the MCP config section of your favorite AI tool:

Or check out our docs for specific instructions for Cursor, Windsurf, Claude, or the OpenAI Agents SDK.

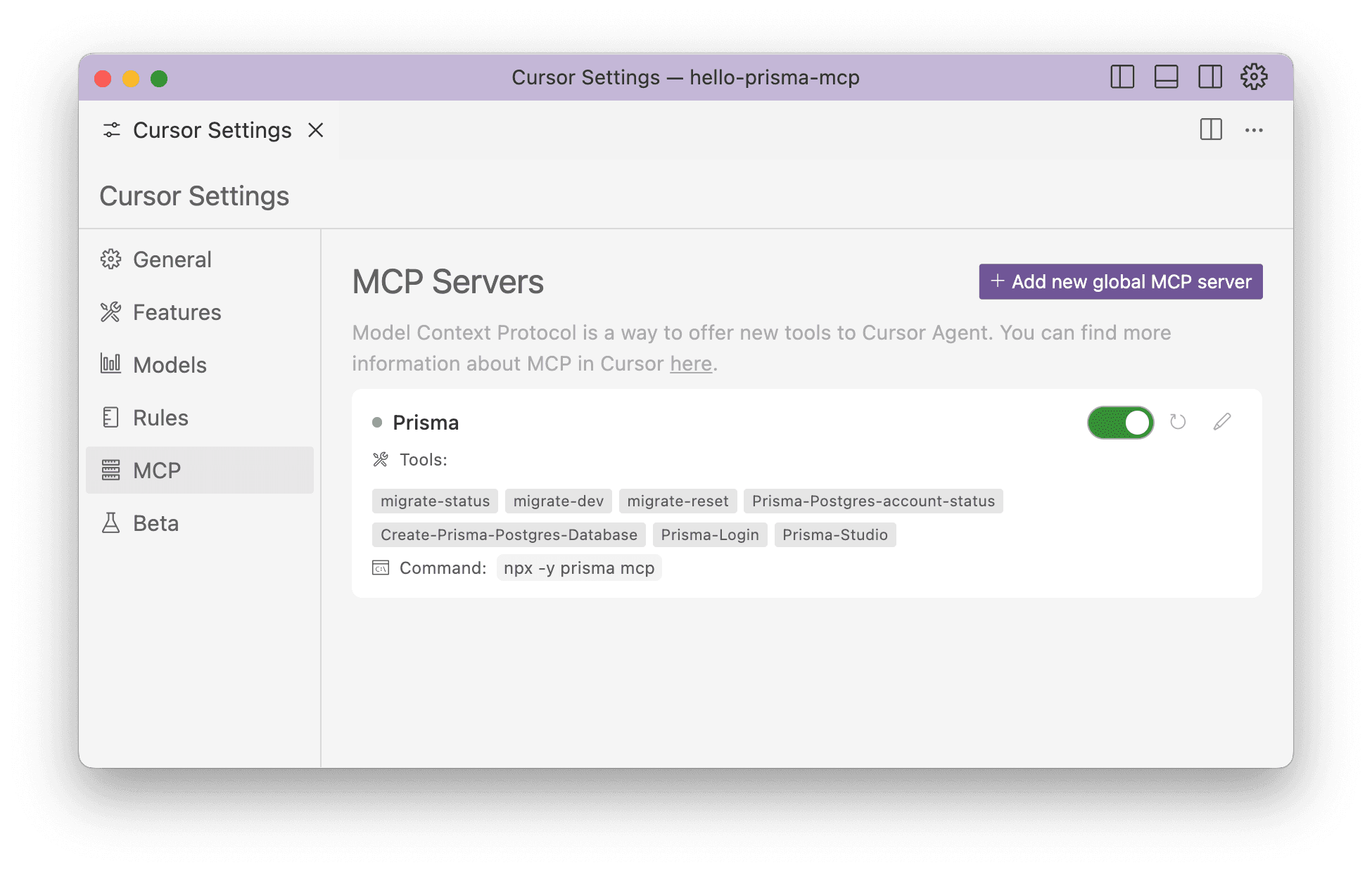

Once added, your AI tool will show the MCP server's status and available tools. Here's how it looks in Cursor:

Beyond MCP: New capabilities for Prisma users in VS Code

What's next? While MCP is powerful, it still requires manual setup in AI tools.

Our goal is to assist developers where they already are. VS Code is the de-facto standard for building web apps, and thanks to its free integration of GitHub Copilot and the LanguageModelTool API, we’re going to bring the capabilities of the MCP server to all Prisma VS Code extension users 🎉. This means Copilot will be capable of assisting you even more with your database workflows very soon!

Share your feedback with us

Have thoughts or questions about MCP, AI tools, or Prisma in general? Reach out on X or join our community on Discord — we'd love to hear from you!
